Recently I've had the pleasure of upgrading our REST interface at work from ASP.NET MVC 3 to ASP.NET Web API, so I thought a "lessons learned" or "watch out for this" blog post was in order, especially since I managed to do it without bumping any version number on our server, i.e. with no breaking changes.

I suspect there are others out there who, before Web API was available, used the MVC framework as a pure REST interface with no front end, i.e. dropping the V in MVC.

Filters

First of all, Web API is all about registering filters and message handlers and letting requests flow through them. A filter can be registered globally, per controller or per action, and message handlers sit in front of the whole controller pipeline, which imo already makes it more flexible than MVC. This is roughly what our Application_Start looks like:

```csharp
public class MvcApplication : HttpApplication
{
    protected void Application_Start()
    {
        // ...

        // Message handlers run for every request, before a controller is selected.
        GlobalConfiguration.Configuration.MessageHandlers.Add(new OptionsHandler());
        GlobalConfiguration.Configuration.MessageHandlers.Add(new MethodOverrideHandler());

        // Insert the custom formatters first so they are considered before the built-in ones.
        GlobalConfiguration.Configuration.Formatters.Insert(0, new TypedXmlMediaTypeFormatter(...));
        GlobalConfiguration.Configuration.Formatters.Insert(0, new TypedJsonMediaTypeFormatter(...));

        // ...
    }
}
```

Method override header

Web API doesn't honor the X-HTTP-Method-Override header by default, and in our installations we often see the PUT, DELETE and HEAD verbs being blocked. So I wrote the following message handler, which I register in Application_Start:

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public class MethodOverrideHandler : DelegatingHandler
{
    private const string Header = "X-HTTP-Method-Override";

    // Verbs a client is allowed to tunnel through POST.
    private readonly string[] methods = { "DELETE", "HEAD", "PUT" };

    protected override Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
    {
        if (request.Method == HttpMethod.Post && request.Headers.Contains(Header))
        {
            var method = request.Headers.GetValues(Header).FirstOrDefault();

            if (method != null && methods.Contains(method, StringComparer.OrdinalIgnoreCase))
            {
                // Rewrite the verb before the controller and action are selected.
                request.Method = new HttpMethod(method.ToUpperInvariant());
            }
        }

        return base.SendAsync(request, cancellationToken);
    }
}
```
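On the client side the override is just a POST with an extra header. Here's a minimal sketch using the request type from System.Net.Http; the helper name and the example URI are hypothetical, and for a PUT the caller would still assign the body to Content.

```csharp
using System;
using System.Net.Http;

// Hypothetical client helper (not part of the original code base).
public static class MethodOverrideClient
{
    // Wraps a PUT/DELETE/HEAD as a POST carrying X-HTTP-Method-Override, for
    // clients behind proxies that block those verbs. MethodOverrideHandler on
    // the server turns it back into the original verb.
    public static HttpRequestMessage Create(HttpMethod method, Uri uri)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, uri);
        request.Headers.Add("X-HTTP-Method-Override", method.Method);
        return request;
    }
}
```

The request can then be sent with `await httpClient.SendAsync(request)`.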

Exception handling filter

In our controllers we throw HttpResponseException when, for instance, a resource isn't found or a request is bad. This is quite neat when you want to short-circuit the request processing pipeline and return an HTTP error status code to the client. What caught me off guard is that when the exception is thrown from a controller action the exception filter is not run, but when it is thrown from a filter it is. After some head scratching I found a discussion thread on CodePlex explaining that this is intentional, so don't throw exceptions in your filters.

We have a filter that looks at the client's Accept header to determine whether our versions (server/client) are compatible. This was previously checked in our base controller, but with Web API it felt like an obvious filtering situation, and we threw a 406 Not Acceptable if the versions weren't compatible. This had to be rewritten to set the response on the action context instead, without calling the base class's OnActionExecuting, which imo isn't as clean a design.

```csharp
using System.Net;
using System.Net.Http;
using System.Web.Http.Controllers;
using System.Web.Http.Filters;

public class VersioningFilter : ActionFilterAttribute
{
    public override void OnActionExecuting(HttpActionContext actionContext)
    {
        var acceptHeaderContents = ...;

        if (string.IsNullOrWhiteSpace(acceptHeaderContents))
        {
            // Setting Response short-circuits the pipeline; the action is never invoked.
            actionContext.Response = actionContext.Request.CreateErrorResponse(HttpStatusCode.NotAcceptable, "No accept header provided");
        }
        else if (!IsCompatibleRequestVersion(acceptHeaderContents))
        {
            actionContext.Response = actionContext.Request.CreateErrorResponse(HttpStatusCode.NotAcceptable, "Incompatible client version");
        }
        else
        {
            base.OnActionExecuting(actionContext);
        }
    }
}
```
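The post doesn't show IsCompatibleRequestVersion. Purely as an illustration, here's one way such a check could look if clients asked for vendor media types like `application/vnd.acme.v2+json`; the `vnd.acme` name, the `v{major}` scheme and the "same major version" rule are all assumptions. It takes the already-parsed Accept values instead of the raw header string.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Net.Http.Headers;
using System.Text.RegularExpressions;

// Hypothetical helper; "vnd.acme" and the "v{major}" naming scheme are assumptions.
public static class VersionCompatibility
{
    private static readonly Regex VendorMediaType = new Regex(
        @"^application/vnd\.acme\.v(?<major>[0-9]+)\+(json|xml)$",
        RegexOptions.IgnoreCase | RegexOptions.CultureInvariant);

    // True if any accepted media type names the server's major version.
    // Parameters such as "minor=3" are not part of MediaType and are ignored.
    public static bool AcceptsMajorVersion(
        IEnumerable<MediaTypeWithQualityHeaderValue> accept, int serverMajorVersion)
    {
        foreach (var mediaType in accept)
        {
            var match = VendorMediaType.Match(mediaType.MediaType ?? string.Empty);
            if (match.Success &&
                int.TryParse(match.Groups["major"].Value, NumberStyles.None,
                             CultureInfo.InvariantCulture, out int major) &&
                major == serverMajorVersion)
            {
                return true;
            }
        }
        return false;
    }
}
```

Inside a filter this could be called with `actionContext.Request.Headers.Accept`, since Web API already exposes the Accept header as a collection of `MediaTypeWithQualityHeaderValue`.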

Request body model binding and query params

In MVC the default model binder mapped x-www-form-urlencoded parameters to action parameters if the name and type matched; this is not the case with Web API, which reads at most one action parameter from the request body. Prepare yourself to create classes and mark the parameter on your action with the FromBody attribute, even if you only want to pass in a simple integer that is not part of the URI. Furthermore, to get hold of query parameters that aren't bound to action parameters, you can pass the request URI's query string to the static helper method ParseQueryString on the HttpUtility class. It's exhausting, but it works and it still doesn't break any existing implementation.

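```csharp
// POST, since the model is read from the x-www-form-urlencoded request body.
[HttpPost]
public HttpResponseMessage Foo([FromBody]MyModel bar)
{
    // Query parameters that aren't bound to action parameters (HttpUtility lives in System.Web).
    var queryParams = HttpUtility.ParseQueryString(Request.RequestUri.Query);
    string q = queryParams["q"];

    // ...
}
```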
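ParseQueryString hands back a NameValueCollection of URL-decoded strings, so anything typed needs a little glue. A minimal sketch, assuming an optional integer query parameter; the helper name and the parameter names are hypothetical:

```csharp
using System.Collections.Specialized;
using System.Globalization;

// Hypothetical helper for typed access to parsed query parameters.
public static class QueryParams
{
    // Reads an optional integer parameter, falling back to defaultValue when
    // the parameter is missing or isn't a valid integer.
    public static int GetInt(NameValueCollection query, string name, int defaultValue)
    {
        return int.TryParse(query[name], NumberStyles.Integer, CultureInfo.InvariantCulture, out int value)
            ? value
            : defaultValue;
    }
}
```

In the action above that would read something like `int page = QueryParams.GetInt(queryParams, "page", 1);`.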

Posting Files

There are plenty of examples out there on how to post a file with MVC or Web API, so I'm not going to cover that. The main difference is that MultipartFormDataStreamProvider needs a root path on the server that specifies where to save the file. We didn't need this in MVC, where we could simply get the file name and contents from the HttpPostedFileBase class. I haven't found a way to keep the file in memory with MultipartFormDataStreamProvider until the controller is done (Web API does ship a MultipartMemoryStreamProvider, which may be worth a look if your uploads are small). I ended up with a few more lines of code that create the attachments directory if it doesn't exist, save the file and then delete it once we've sent the byte data to our services.

```csharp
[ActionName("Index"), HttpPost]
public async Task<HttpResponseMessage> CreateAttachment(...)
{
    if (!Request.Content.IsMimeMultipartContent())
    {
        throw new HttpResponseException(HttpStatusCode.UnsupportedMediaType);
    }

    // MultipartFormDataStreamProvider writes uploads to disk, so it needs a root path.
    // CreateDirectory is a no-op when the directory already exists.
    string attachmentsDirectoryPath = HttpContext.Current.Server.MapPath("~/SomeDir/");
    Directory.CreateDirectory(attachmentsDirectoryPath);

    var provider = new MultipartFormDataStreamProvider(attachmentsDirectoryPath);
    var result = await Request.Content.ReadAsMultipartAsync(provider);

    try
    {
        if (result.FileData.Count < 1)
        {
            throw new HttpResponseException(HttpStatusCode.BadRequest);
        }

        var fileData = result.FileData.First();

        // The file name may arrive quoted, e.g. "\"report.pdf\"".
        string filename = (fileData.Headers.ContentDisposition.FileName ?? string.Empty).Trim('"');

        // Do stuff ...

        return Request.CreateResponse(HttpStatusCode.Created, ...);
    }
    finally
    {
        // Remove the temporary files even if processing fails.
        foreach (var file in result.FileData)
        {
            File.Delete(file.LocalFileName);
        }
    }
}
```
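For completeness, this is roughly what a client upload for the action above could look like with System.Net.Http. It's a sketch only: the form field name "file", the helper name and the example URI are assumptions, since the post doesn't show the client side.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical client helper for posting an attachment.
public static class AttachmentClient
{
    // Builds a multipart/form-data POST that ReadAsMultipartAsync can read on the server.
    public static HttpRequestMessage CreateUploadRequest(Uri uri, byte[] data, string fileName)
    {
        var fileContent = new ByteArrayContent(data);
        fileContent.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

        var form = new MultipartFormDataContent();
        // Adds a Content-Disposition header with the field name and file name,
        // which the server reads via Headers.ContentDisposition.FileName.
        form.Add(fileContent, "file", fileName);

        return new HttpRequestMessage(HttpMethod.Post, uri) { Content = form };
    }
}
```

Because the request's Content-Type is multipart/form-data, the IsMimeMultipartContent check in the action passes.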

Breaking change in serialization/deserialization: JavaScriptSerializer -> Newtonsoft.Json

Web API ships with the Newtonsoft.Json serializer. There are probably more differences than the ones I noticed, but DateTime values are serialized differently with Newtonsoft. To be sure we didn't break any existing implementations, I implemented my own formatter that wraps JavaScriptSerializer and inserted it first in my formatters configuration. Custom formatters are really easy to implement: all you need to do is inherit from MediaTypeFormatter and override CanReadType, CanWriteType and the read/write methods.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Http;
using System.Net.Http.Formatting;
using System.Net.Http.Headers;
using System.Text;
using System.Threading.Tasks;
using System.Web.Script.Serialization;

public class TypedJsonMediaTypeFormatter : MediaTypeFormatter
{
    public TypedJsonMediaTypeFormatter(MediaTypeHeaderValue mediaType)
    {
        // Only respond to our own vendor media type.
        SupportedMediaTypes.Clear();
        SupportedMediaTypes.Add(mediaType);
    }

    // ...

    public override Task<object> ReadFromStreamAsync(Type type, Stream readStream, HttpContent content, IFormatterLogger formatterLogger)
    {
        return Task.Factory.StartNew(() =>
        {
            // Read the raw JSON body; Web API owns readStream, so it isn't disposed here.
            string json = new StreamReader(readStream, Encoding.UTF8).ReadToEnd();

            // JavaScriptSerializer instances aren't documented as thread-safe, so use one per call.
            return new JavaScriptSerializer().Deserialize(json, type);
        });
    }

    public override Task WriteToStreamAsync(Type type, object value, Stream writeStream, HttpContent content, TransportContext transportContext)
    {
        return Task.Factory.StartNew(() =>
        {
            string json = new JavaScriptSerializer().Serialize(value);

            // Encoding.Default depends on the server's code page; JSON should be UTF-8.
            byte[] buf = Encoding.UTF8.GetBytes(json);
            writeStream.Write(buf, 0, buf.Length);
            writeStream.Flush();
        });
    }
}
```
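To make the date difference concrete: JavaScriptSerializer writes a DateTime as milliseconds since the Unix epoch wrapped in `\/Date(...)\/`, while Json.NET's default is an ISO 8601 string. The sketch below reproduces both shapes by hand with the BCL only. It illustrates the formats, it isn't either library's actual code, and Json.NET's exact fractional-second output may differ from the "o" format used here.

```csharp
using System;
using System.Globalization;

// Illustrative only: hand-built JSON string literals in the two date styles.
public static class JsonDateFormats
{
    private static readonly DateTime UnixEpoch = new DateTime(1970, 1, 1, 0, 0, 0, DateTimeKind.Utc);

    // JavaScriptSerializer style: "\/Date(<milliseconds since 1970-01-01 UTC>)\/".
    // ToUniversalTime treats DateTimeKind.Unspecified values as local time.
    public static string ToJavaScriptSerializerStyle(DateTime value)
    {
        long ms = (value.ToUniversalTime().Ticks - UnixEpoch.Ticks) / TimeSpan.TicksPerMillisecond;
        return "\"\\/Date(" + ms.ToString(CultureInfo.InvariantCulture) + ")\\/\"";
    }

    // ISO 8601 style, in UTC, using the round-trip "o" format.
    public static string ToIsoStyle(DateTime value)
    {
        return "\"" + value.ToUniversalTime().ToString("o", CultureInfo.InvariantCulture) + "\"";
    }
}
```

For 2013-01-01 00:00 UTC the first gives `"\/Date(1356998400000)\/"` and the second `"2013-01-01T00:00:00.0000000Z"`; a client written against the first shape won't necessarily understand the second.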

MediaTypeFormatters and the Content-Type header

Any real-world REST interface needs its own content types, but by default the XML and JSON formatters only know about application/xml and application/json (plus text/xml and text/json). This is not good enough, so I suggest creating custom implementations of JsonMediaTypeFormatter and XmlMediaTypeFormatter and inserting them first in your formatters configuration. In your custom formatter, add your media type, including vendor and version, to the SupportedMediaTypes collection. In our case we also append the server's minor version to the content type as a parameter; the easiest way to do that is to override the SetDefaultContentHeaders method and append whichever parameter you want to the Content-Type header.

```csharp
using System;
using System.Globalization;
using System.Net.Http.Formatting;
using System.Net.Http.Headers;

public class TypedXmlMediaTypeFormatter : XmlMediaTypeFormatter
{
    private readonly int minorApiVersion;

    public TypedXmlMediaTypeFormatter(MediaTypeHeaderValue mediaType, int minorApiVersion)
    {
        this.minorApiVersion = minorApiVersion;

        // Replace the default application/xml and text/xml with our vendor media type.
        SupportedMediaTypes.Clear();
        SupportedMediaTypes.Add(mediaType);
    }

    // ...

    public override void SetDefaultContentHeaders(Type type, HttpContentHeaders headers, MediaTypeHeaderValue mediaType)
    {
        base.SetDefaultContentHeaders(type, headers, mediaType);

        // Append the server's minor version as a Content-Type parameter, e.g. "; minor=3".
        headers.ContentType.Parameters.Add(new NameValueHeaderValue("minor", minorApiVersion.ToString(CultureInfo.InvariantCulture)));
    }
}
```
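On the consuming side the minor version can be read back from the response's Content-Type. A minimal sketch, assuming a client built on System.Net.Http (where `response.Content.Headers.ContentType` is a MediaTypeHeaderValue); the helper name and the example media type are hypothetical.

```csharp
using System;
using System.Globalization;
using System.Linq;
using System.Net.Http.Headers;

// Hypothetical client-side counterpart to TypedXmlMediaTypeFormatter.
public static class ContentTypeVersion
{
    // Returns the "minor" Content-Type parameter, or null when it is absent
    // or not a plain integer (quoted values are not unquoted here).
    public static int? GetMinorVersion(MediaTypeHeaderValue contentType)
    {
        if (contentType == null)
        {
            return null;
        }

        var minor = contentType.Parameters.FirstOrDefault(
            p => string.Equals(p.Name, "minor", StringComparison.OrdinalIgnoreCase));

        if (minor != null &&
            int.TryParse(minor.Value, NumberStyles.None, CultureInfo.InvariantCulture, out int value))
        {
            return value;
        }
        return null;
    }
}
```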

I think that covers it all. Good luck migrating your REST API!

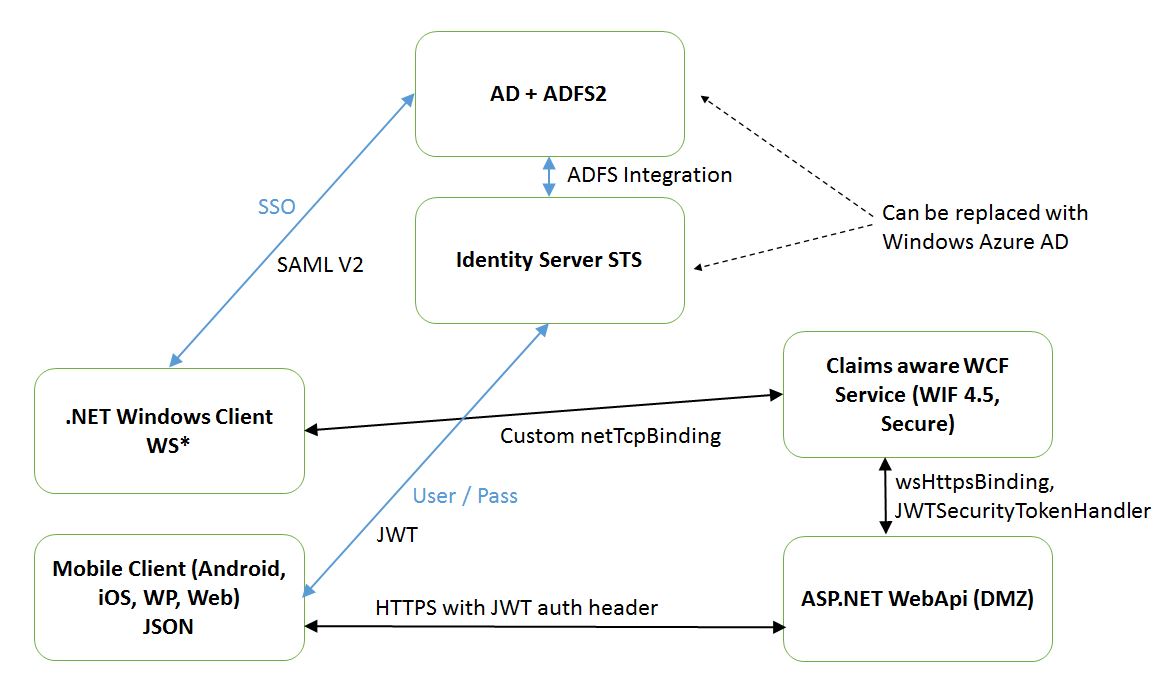

I might cover upgrading from WIF 3.5 (Microsoft.IdentityModel) to WIF 4.5 in my next post, or moving from Thinktecture's StarterSTS to IdentityServer v2. Take a wild guess what I've been busy with at work! ;-)